How to perform a link audit on your site:

- Collect data from Search Console, aHrefs and Majestic

- Upload all data to Kerboo

- Allow Kerboo to recrawl and score all links

- Manually review and mark for removal

We have a 100% success rate at link auditing and penalty recovery for our clients, but that rarely leaves us time to take care of our own aged domain portfolio. Better get this cleaned up.

Making a start on the SEO penalty recovery

I acted on the adult directory list immediately – in fact, I found the company that owned the directories (based in Hyderabad, India) and paid the owner a small sum to remove all 2,000 links, but never really bothered about what had happened with Directory Maximiser. We migrated domains a couple of years later, and I thought no more of SEOgadget.co.uk’s plight.

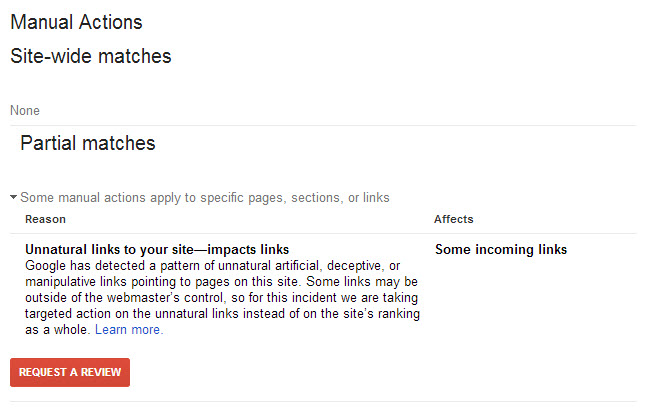

Until earlier this year. When I was writing about using change of address in Webmaster Tools, I found this:

Oops.

What a terrific opportunity for a case study, though. I just happened to be writing a presentation on the subject of penalty recovery and link auditing, so I thought I’d talk about my experience getting this sorted.

There’s an obvious caveat here: you’d want to avoid a penalty entirely by getting a disavow file submitted to Search Console ahead of time, though it’s still good fun to get my sleeves rolled up and get a manual action revoked (even though I have several teams that could have done this for me).

OK, I want to create a disavow quickly and effectively. What tools should I be using, and what data points should I care about?

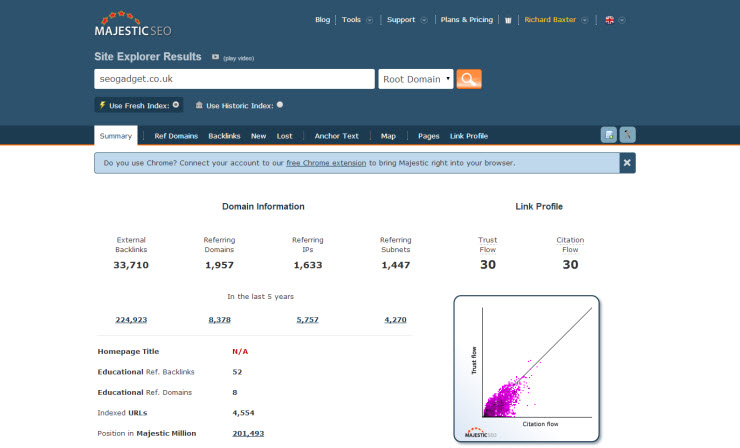

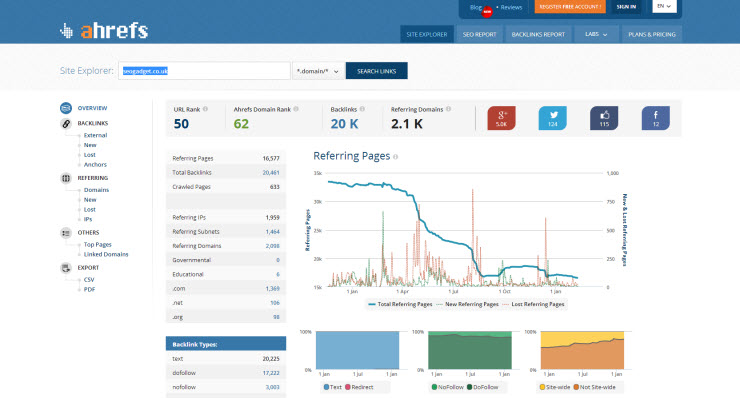

Firstly, the data tools: for me it’s MajesticSEO, aHrefs and Search Console for the raw link data export:

Majestic’s Fresh index has a reliable re-crawl rate (of around 90 days). Trust and Citation Flow are really interesting metrics – any large difference between the two can be a strong indicator that something isn’t right. The Historic index is *massive* but quite difficult to work with and very noisy. I asked around and most of us agreed that unless we’re working with the API we rarely touch the Historic index these days.
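As a rough illustration of that Trust Flow vs Citation Flow check, here is a minimal Python sketch. The function names and the 0.5 threshold are my own assumptions for the example, not anything Majestic publishes, so tune the cut-off against links you have already reviewed by hand.

```python
def flow_gap(trust_flow: float, citation_flow: float) -> float:
    """Gap between the two Majestic metrics, as a share of the larger one."""
    larger = max(trust_flow, citation_flow)
    if larger <= 0:
        return 0.0
    return abs(citation_flow - trust_flow) / larger


def looks_unbalanced(trust_flow: float, citation_flow: float,
                     max_gap: float = 0.5) -> bool:
    """True when the gap exceeds max_gap (a hypothetical threshold)."""
    return flow_gap(trust_flow, citation_flow) > max_gap


if __name__ == "__main__":
    # Made-up numbers: (Trust Flow, Citation Flow)
    for tf, cf in [(10, 40), (30, 32)]:
        print(tf, cf, round(flow_gap(tf, cf), 2), looks_unbalanced(tf, cf))
```

A flagged domain is only a prompt for manual review; the gap on its own doesn’t prove anything.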

AHrefs is the rising star in our organisation. They seem to have a pretty solid UI and a fast discovery rate for new links. This makes initial assessments really easy, particularly when we’re looking at charts that may indicate a domain has been attacked or has ramped up its link acquisition efforts.

Finally, if I only had one tool, it would have to be Google’s Search Console. In 2012 I took a look at who had the deepest and most diverse crawl (and consequently, who had the best link data). It was Search Console. It is still Search Console.

Open Site Explorer gets an honourable mention but for link auditing, it’s a no from me. The depth of data you need for this type of analysis isn’t provided, though for reporting on good quality links, and metrics like Domain Authority, OSE is occasionally something we’d use.

Then we have consolidation tools, tools that don’t generate link index data per se, but aid in the collection, consolidation, analysis or action stages of an audit:

Kerboo is a really remarkable new entrant to the space. It’s the greatest time saver (especially if you’re an Agency SEO with multiple small to medium size site profiles to manage).

You can segment links by anchor text, PageRank, risk, status, site wides, etc. It connects to all of the major services to extract link data: Search Console, Majestic and aHrefs. Awesome. With a bit of perseverance, you can build a decent disavow file and get the outreach process started for link removal. We’ve built our own stuff for larger projects but I must say – if you’re in-house and don’t have a huge amount of resource to reinvent the wheel, don’t!

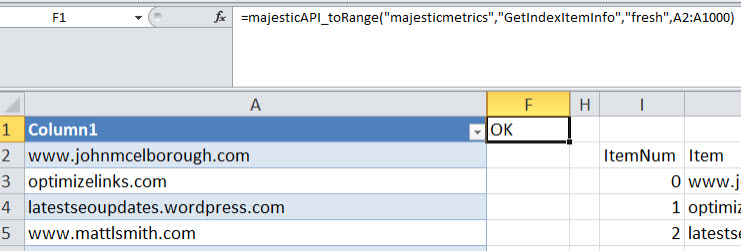

Our own SEOgadget for Excel connects to aHrefs, Majestic, Moz, Grepwords and SEMrush to speed up the process of fetching data. In this use case, I recommend giving the =GetIndexItemInfo() command from the MajesticSEO API a whirl – that’s the API call to get all the Majestic metrics into a spreadsheet, including Citation Flow and Trust Flow.

What data points are we looking to collect?

Completeness is everything. We build as diverse a data set as possible, mostly because there is *always* some debate with a client on the greyer areas of their link-building – we need to take a hard and fast approach to this, so having a decent case as to why a link needs to go is a good thing:

- Ahrefs, Majestic, WMT

- Destination URL & HTTP status

- Anchor text

- PageRank (domain / URL / Fake check)

- IP address

- Follow/Nofollow

- No. referring domains

- Live / not live

- Page indexation

- Pages with malicious / explicit content

- Page title & meta description

What are you looking out for?

These are the sorts of things you’re looking out for; it’s not an exhaustive list but there are some pretty big tell tale signs in here:

- Site-wide links

- Links from penalised domains

- Odd PageRank distribution curve (eg: lots of PR0/1’s)

- Links with exact match keyword anchors

- Links from pages with malicious / explicit content

- Links from directories / article directories

- Lots of domains on the same C-block IP

- Anchor text distribution – brand vs too much non-brand

- Hidden dofollow comment spam (for example, under the Disqus plugin)

- Links from known software submitters like GSA
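Two of the signs above lend themselves to a quick script: lots of domains on the same C-block, and the brand vs non-brand anchor split. The Python sketch below is illustrative only. The function names, the `min_domains` cut-off, the IPv4-only handling and the simple substring match for brand terms are all assumptions I’ve made for the example.

```python
from collections import defaultdict
from typing import Dict, Iterable, List


def c_block(ip: str) -> str:
    """First three octets of an IPv4 address, e.g. '203.0.113'."""
    return ".".join(ip.split(".")[:3])


def crowded_c_blocks(domain_ips: Dict[str, str],
                     min_domains: int = 3) -> Dict[str, List[str]]:
    """Group linking domains by C-block and keep the crowded blocks."""
    groups = defaultdict(list)
    for domain, ip in domain_ips.items():
        groups[c_block(ip)].append(domain)
    return {block: sorted(domains)
            for block, domains in groups.items()
            if len(domains) >= min_domains}


def anchor_shares(anchors: List[str],
                  brand_terms: Iterable[str]) -> Dict[str, float]:
    """Share of anchors that mention a brand term vs those that do not."""
    terms = [t.lower() for t in brand_terms]
    total = len(anchors)
    if total == 0:
        return {"brand": 0.0, "non_brand": 0.0}
    brand = sum(1 for a in anchors if any(t in a.lower() for t in terms))
    return {"brand": brand / total, "non_brand": (total - brand) / total}


if __name__ == "__main__":
    # Made-up data using reserved documentation IP ranges.
    ips = {"a.example": "203.0.113.5", "b.example": "203.0.113.9",
           "c.example": "203.0.113.77", "d.example": "198.51.100.1"}
    print(crowded_c_blocks(ips))
    print(anchor_shares(["SEOgadget", "cheap seo services"], ["seogadget"]))
```

Neither check replaces the manual review; they just tell you where to look first.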

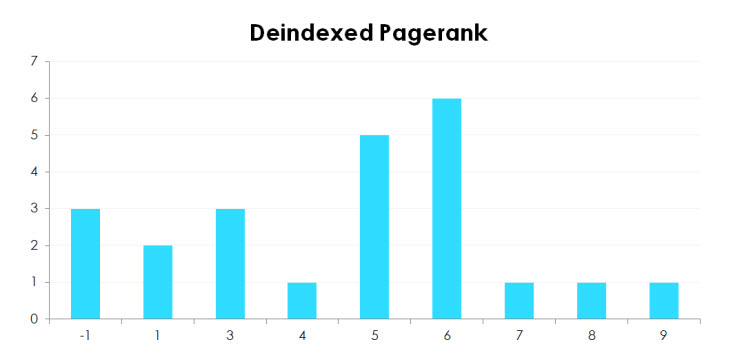

One of the data points I really find interesting on suspicious inbound links is when the link or the linking domain has been removed from Google’s index, but it still has a PageRank value assigned:

None of these data points alone is 100% reliable, but if I’m going to take a hard and fast (and reasonably aggressive) view of what’s got to be disavowed, domains that are no longer indexed are a pretty big red flag to me.
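If you’ve already collected indexation status and PageRank for each linking domain, that red flag is a one-line filter. A minimal sketch, assuming each row is a dict with hypothetical `domain`, `indexed` and `pagerank` keys (your export’s column names will differ):

```python
from typing import Dict, List


def deindexed_with_pagerank(rows: List[Dict]) -> List[str]:
    """Domains that are out of the index but still show a PageRank value."""
    flagged = []
    for row in rows:
        if not row["indexed"] and row["pagerank"] > 0:
            flagged.append(row["domain"])
    return flagged


if __name__ == "__main__":
    # Made-up rows for illustration only.
    sample = [
        {"domain": "dir.example", "indexed": False, "pagerank": 3},
        {"domain": "blog.example", "indexed": True, "pagerank": 4},
        {"domain": "gone.example", "indexed": False, "pagerank": 0},
    ]
    print(deindexed_with_pagerank(sample))
```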

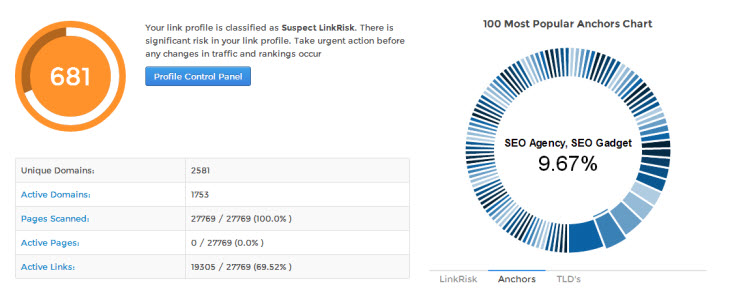

Obviously, site wides are easy to spot (and identify in aHrefs, WMT and LinkRisk) and with LinkRisk, anchor text footprints from things like directory submission are really easy to grab hold of:

Side note: the de-dupe test

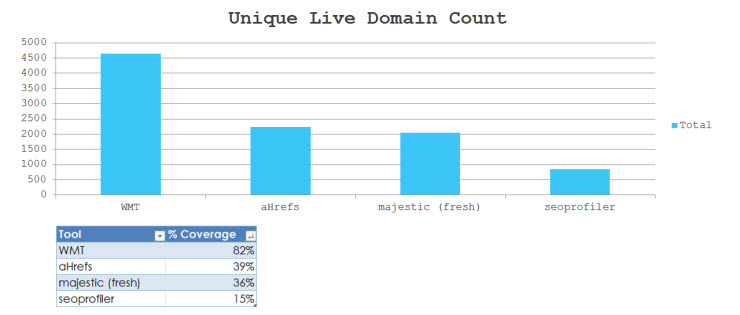

Which tool offers the most diverse link data? By diverse I mean the greatest number of pages crawled per domain and the most unique domains. Back in 2012, it was Search Console. It still is!

I download everything a tool’s got to give (individual URLs linking to my site). Then, I extract the unique domain names and de-duplicate the results. That gives me a pretty good feel for the diversity of the data contained in each service.

Consolidating all exported data gave us 5672 unique domains linking to SEOgadget.co.uk. That means it’s a trivial matter to understand how much of a contribution each tool would make to your process. The “coverage” score looks at how many of the domains were found in each export – so most of it comes from Search Console.
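The de-dupe test is easy to reproduce. Here is a minimal Python sketch, assuming each tool’s export has already been read into a list of linking URLs. The function names are hypothetical, and note that it de-duplicates on hostname (with a leading `www.` stripped), which is a simplification: subdomains count as separate domains here.

```python
from typing import Dict, List, Set, Tuple
from urllib.parse import urlsplit


def domain_of(url: str) -> str:
    """Lower-cased hostname with a leading 'www.' removed."""
    if "://" not in url:
        url = "http://" + url
    host = urlsplit(url).hostname or ""
    return host[4:] if host.startswith("www.") else host


def coverage(exports: Dict[str, List[str]]) -> Tuple[Set[str], Dict[str, float]]:
    """Return all unique domains, plus the share of them each tool found."""
    per_tool = {tool: {domain_of(u) for u in urls} - {""}
                for tool, urls in exports.items()}
    all_domains = set().union(*per_tool.values())
    if not all_domains:
        return all_domains, {tool: 0.0 for tool in per_tool}
    scores = {tool: len(found) / len(all_domains)
              for tool, found in per_tool.items()}
    return all_domains, scores


if __name__ == "__main__":
    # Made-up URLs standing in for real exports.
    exports = {
        "search_console": ["http://www.a.example/page1", "https://b.example/"],
        "majestic": ["http://b.example/y", "http://d.example/"],
    }
    domains, scores = coverage(exports)
    print(len(domains), scores)
```

The length of the first return value is the consolidated unique-domain count, and the second is the “coverage” score per tool.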

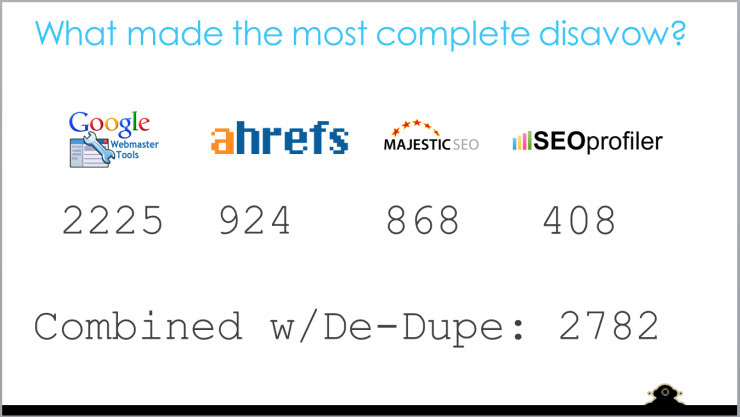

From that point I created a manually reviewed disavow list, firstly as if I was only working with one tool, and secondly as a consolidated “master” list:

I still feel like it’s worth having Majestic and aHrefs – they seem to be able to find links that Google does not report, although the volume and diversity of unique domains coming from Google vastly outperforms the others.
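Once the master list has been manually reviewed, turning it into a disavow file is the easy part. Google’s disavow format is a plain text file with one entry per line: `domain:example.com` to disavow a whole domain, a full URL to disavow a single page, and `#` for comment lines. The helper below is a minimal sketch of that format; the function name and its parameters are my own, for illustration.

```python
from typing import Iterable


def build_disavow(domains: Iterable[str],
                  urls: Iterable[str] = (),
                  note: str = "") -> str:
    """Build the text of a disavow file from reviewed domains and URLs."""
    lines = []
    if note:
        lines.append("# " + note)
    # De-duplicate and normalise domains before writing them out.
    cleaned = {d.strip().lower() for d in domains if d.strip()}
    for domain in sorted(cleaned):
        lines.append("domain:" + domain)
    for url in sorted({u.strip() for u in urls if u.strip()}):
        lines.append(url)
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Made-up entries for illustration only.
    text = build_disavow(["Spam.example", "dir.example"],
                         ["http://blog.example/bad-page"],
                         note="reviewed manually")
    with open("disavow.txt", "w", encoding="utf-8") as handle:
        handle.write(text)
```

Disavowing at domain level is the more aggressive choice, which fits the hard and fast approach described here; use the URL form only where the rest of the domain is worth keeping.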

A word on disavow (and what to remove)

Don’t take any stage in this process lightly.

When Cyrus submitted all of his links via disavow, he permanently lost all of his rankings. Review your links thoroughly – submit a *good* link and it’ll never give you PageRank again.

Should links be removed, disavowed, or both?

It depends on the severity of what you were doing. If it’s all directory links and simple, stupid stuff that isn’t terribly extreme, a disavow will do. If it’s paid links, if you’re on an outed network, if it’s something you could make a webmaster analyst look stupid for if you blogged about it after re-inclusion, it needs removing. And on that note, the worst thing you could ever, ever do is *say* you’ve stopped doing something when you very obviously have not. Google can see what you’re up to!

Anyway, the conclusion to my story

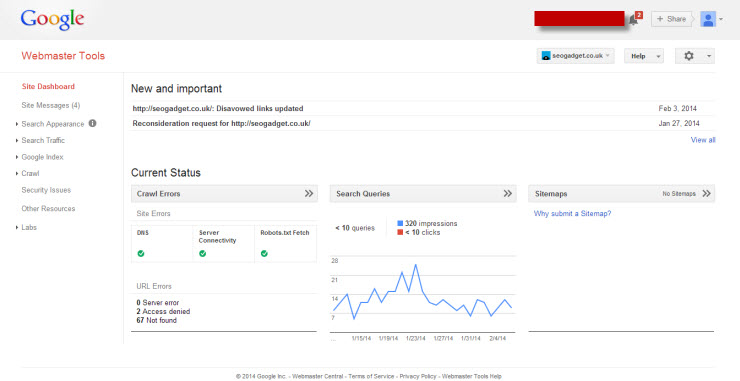

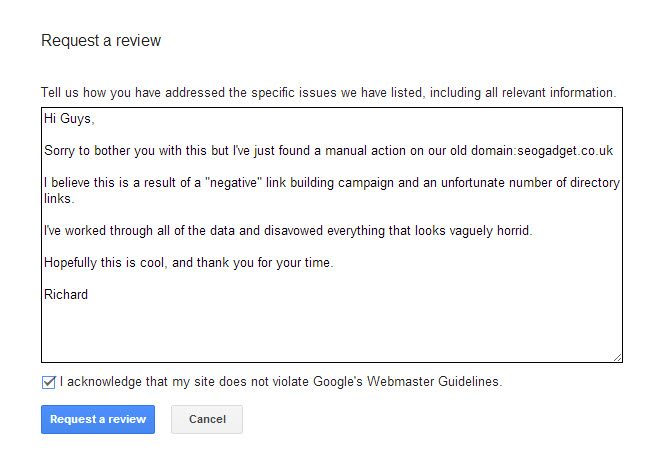

Here’s my re-inclusion request after uploading my new disavow file (with 2,378 domains!). Note, I haven’t written War and Peace, I haven’t outed anyone (honestly, I’ve seen some re-inclusion requests that are several pages long and out every agency the brand has ever worked with – not necessary, guys), I’ve just been nice, honest and to the point:

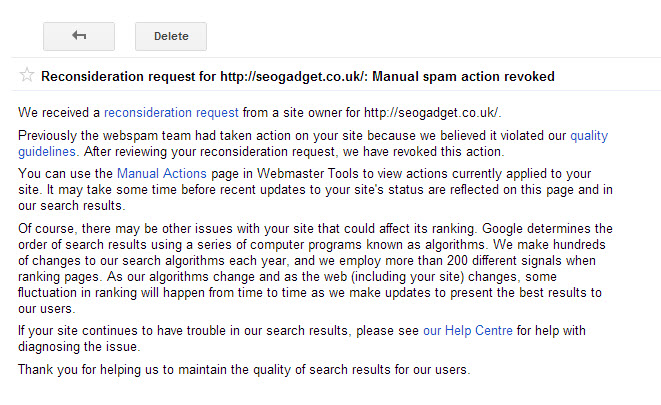

And then this happened, just in time for my presentation at SES (*wipes brow*):

The moral of the story?

Link auditing needs hard and fast rules to work. There are flags that you should simply not ignore, at least, if you do, ignore them at your peril. If you have a penalty, you’ve got to be aggressive. Obviously, the situation described was quite mild but it still took a very large disavow file, lots of data integrity and detailed manual review time to get it right. If the backlink profile of a site isn’t too dirty, then a complete disavow of anything vaguely awful will be fine. You’ve got to be very, very thorough, though.

As an aside: I’m not sure why SEOs wait for a penalty before starting the clean-up process. If you’ve got lame links in your profile, disavow them now!

If you’ve been very aggressive in your link tactics, bought network links, offered product in exchange for exact match anchors, been on some of the very high profile paid networks, especially the really bad ones, then Google is going to insist on you cleaning up after yourself.

And finally, on what makes for a bad link – if you find yourself debating whether a link is good or bad, it’s definitely bad.

Chris Gedge

Short and polite re-inclusion requests for the win! I was in exactly the same situation. Used disavow file ONLY and a nice, short re-inclusion request and recovered within 2 weeks :)

Darren Jamieson

Thanks for the level of detail on this. It made for interesting reading. Like you, I seem to enjoy going into the bowels of an old link profile and finding some of the seriously dodgy links which crop up. I’m still amazed to this day the level of bad linking done by some people.

Alex Ivanovs

Hey,

I’ve included you in my weekly marketing roundup,

https://codecondo.com/online-marketing-weekly-roundup-feb-8-feb-14-2014/

Thank you,

Alex

Richard Baxter

@Alex, thanks buddy – just tweeted your roundup!

Casey Markee

“Review your links thoroughly – submit a *good* link and it’ll never give you PageRank again.”

Not true. Cyrus has a great write-up…but it’s almost a year old and many of his case details have changed. Further, it’s understood more clearly now that domains in a Disavow File only have the invisible nofollow AS LONG AS THEY ARE IN THE DISAVOW FILE. If you remove a domain from your file, such as uploading and saving over your original file with a new file that has original domains removed, those domains will no longer be subject to the “invisible nofollow.”

There is no evidence, none, that once a domain is included in the disavow file it’s on a site specific blacklist forever. Far from it. In fact, we’ve found just the opposite.

Other than the above, great quality stuff.

Cyrus Shepard

Casey, interesting point of view. I would love to see a case study on this! I hope you are right, although I haven’t seen enough evidence to believe one way or another. I still advise webmasters to be very careful about what they disavow, because there is a risk of losing those links for good. I can’t prove this, but I’d need some proof in the other direction before I was convinced.

(and by the way, I don’t pretend to know anything about the disavow function. the more I think I understand, the more confusing it gets :)

Hayden Bray

I realize that Google has a monumentally challenging task on their hands of organizing the information of the entire internet. But the repeated craziness of following one of Google’s recommended practices and then somehow down the road finding out that their algorithms have changed and you’re being penalized and need to do lots of work (with limited guidance on whether or not an action will be positive) is a very frustrating experience. I’m not a huge fan of most types of social media and this over-sharing complex built into some people, but I hope that Google starts being challenged more and more and is forced to improve. Facebook is starting to try and make some inroads into search, they’ve got a ton of businesses on board and a pretty big third party ecosystem (see https://www.facebooklikesreviews.com for instance), and more social data than anybody. If they can provide some legitimate competition in search, it might motivate Google to provide better tools for dealing with some of this stuff and even better algorithms to avoid forcing webmasters to do their own work for them.